2024

Neural style transfer blends the content of one image with the artistic style of another, a fascinating intersection of art and deep learning that I had wanted to explore for quite some time. For this side project, I implemented the original neural style transfer paper in a Jupyter notebook to understand the underlying techniques in depth. To make the project more interactive and accessible, I built a demo app with Gradio that lets users apply artistic styles to their own images directly from a web interface.
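
In the original method, an image's style is represented by Gram matrices: correlations between the channels of a CNN layer's feature maps, which discard where in the image each feature occurs. The NumPy sketch below illustrates that idea for a single layer, assuming the feature maps have already been extracted as (channels, height, width) arrays. The function names, the normalization, and the `alpha`/`beta` weights are illustrative choices, not taken from the notebook, and the paper combines style losses from several layers rather than one.

```python
import numpy as np


def gram_matrix(features: np.ndarray) -> np.ndarray:
    """Channel-by-channel correlations of a (C, H, W) feature map."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)  # one row of activations per channel
    return flat @ flat.T / (h * w)     # (C, C), normalized by spatial size


def style_loss(generated: np.ndarray, style: np.ndarray) -> float:
    """Mean squared difference between the Gram matrices of two feature maps."""
    diff = gram_matrix(generated) - gram_matrix(style)
    return float(np.mean(diff ** 2))


def content_loss(generated: np.ndarray, content: np.ndarray) -> float:
    """Mean squared difference between the feature maps themselves."""
    return float(np.mean((generated - content) ** 2))


def total_loss(generated, content, style, alpha=1.0, beta=1e3):
    """Weighted sum; alpha and beta (illustrative values) trade content against style."""
    return alpha * content_loss(generated, content) + beta * style_loss(generated, style)
```

Because flattening discards positions, a spatially flipped copy of a feature map has the same Gram matrix: its style loss is zero while its content loss is not. That separation of "what textures appear" from "where things are" is what lets the method mix the two images.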

2024

In 2024, I focused on adapting to my new role and dedicated time to developing soft skills such as business analysis. I also started diving deeper into MLOps, working with MLflow and its Databricks integration to manage model experiments and deployments. This combination of broadening technical expertise and sharpening analytical skills has been a key part of my professional growth this year.

2023

Working with image captioning models is an exciting area of deep learning. As a side project to gain hands-on experience with HuggingFace, I set out to fine-tune the ViT-GPT-2 model for image captioning. However, because server capacity was tied up by ongoing commercial projects, I wasn't able to carry out the fine-tuning itself. Nonetheless, I explored the model and wrote code to use and fine-tune it with the HuggingFace Transformers library. For general image captioning, I also created a demo application that runs on a desktop GPU.

2023

While studying Proximal Policy Optimization (PPO), I implemented the algorithm from scratch and trained agents to play Atari games in the Gym environment. PPO is a popular reinforcement learning algorithm known for its stable training and good sample efficiency compared with other on-policy methods. This project gave me hands-on experience with PPO and insight into its inner workings.
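
Much of PPO's stability comes from its clipped surrogate objective, which limits how far a single update can move the policy. A minimal NumPy sketch of that loss for one batch follows, assuming log-probabilities and advantage estimates have already been computed; the function name is illustrative, and `clip_eps=0.2` is a commonly used default, not necessarily the value used in this project.

```python
import numpy as np


def ppo_clip_loss(new_logp, old_logp, advantages, clip_eps=0.2):
    """Negative clipped surrogate objective (to be minimized) for one batch.

    new_logp / old_logp: log pi(a|s) under the current and the data-collecting policy.
    advantages: advantage estimates for the same (state, action) pairs.
    """
    ratio = np.exp(new_logp - old_logp)  # pi_new(a|s) / pi_old(a|s)
    unclipped = ratio * advantages
    clipped = np.clip(ratio, 1.0 - clip_eps, 1.0 + clip_eps) * advantages
    # Elementwise minimum = pessimistic lower bound on the policy improvement.
    return float(-np.mean(np.minimum(unclipped, clipped)))
```

Taking the minimum means the policy gains nothing from pushing the probability ratio beyond [1 - ε, 1 + ε] in the direction the advantage favors, while moves in the wrong direction are still fully penalized. For example, a ratio of 2 with advantage +1 contributes only 1.2, but with advantage -1 it contributes the full -2.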

2023

For working effectively with transformers, HuggingFace is an indispensable platform. It offers a wide range of resources, including datasets, pretrained models, and courses, specifically tailored to transformer-based models. To deepen my understanding and expertise in this area, I built an arXiv summarizer using HuggingFace.

2023

The Deep Reinforcement Learning course organized by HuggingFace was a comprehensive journey: starting from the fundamentals, we quickly moved on to more advanced topics such as policy-gradient methods and competitive multi-agent environments. The course not only refreshed my RL knowledge but also introduced me to new concepts and techniques. And, to be honest, it was great fun.

2023

When working with transformers, HuggingFace is an indispensable platform, offering datasets, pretrained models, courses, and more, all tailored to transformer-based models. To gain hands-on experience, I created several projects with HuggingFace. The Reddit TLDR project is one of them: it explores the capabilities of transformers by summarizing Reddit posts.

2023

One of my personal study goals for 2023 was to better understand transformers, both theoretically and practically. In this repository, I implemented the transformer architecture from the paper "Attention Is All You Need" from scratch in PyTorch. Building the model myself gave me a deep understanding of the attention mechanisms, positional encodings, and feed-forward networks used in transformers.
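
Two of these components are easy to show in isolation: scaled dot-product attention, `softmax(Q K^T / sqrt(d_k)) V`, and the sinusoidal positional encodings from the paper. The repository implements them in PyTorch; this sketch uses NumPy instead so it runs without a deep learning framework, and the function names are illustrative rather than taken from the repository.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def scaled_dot_product_attention(q, k, v, mask=None):
    """q: (..., Lq, d_k), k: (..., Lk, d_k), v: (..., Lk, d_v).

    mask: optional boolean array broadcastable to (..., Lq, Lk); True = may attend.
    Returns the attended values and the attention weights.
    """
    d_k = q.shape[-1]
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(d_k)  # (..., Lq, Lk)
    if mask is not None:
        scores = np.where(mask, scores, -1e9)  # masked positions get ~zero weight
    weights = softmax(scores, axis=-1)  # each query's weights sum to 1
    return weights @ v, weights


def sinusoidal_positional_encoding(max_len, d_model):
    """(max_len, d_model) table: sine on even dimensions, cosine on odd ones.

    Assumes d_model is even.
    """
    pos = np.arange(max_len)[:, None]
    i = np.arange(0, d_model, 2)[None, :]
    angles = pos / np.power(10000.0, i / d_model)
    pe = np.zeros((max_len, d_model))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles)
    return pe
```

Passing a lower-triangular boolean mask (for example `np.tril(np.ones((L, L), dtype=bool))`) turns this into the causal self-attention used in the decoder, where each position can only attend to itself and earlier positions.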

2022

In this repository, I developed a collection of small computer vision programs in Python: a face detector and anonymizer; a smart document scanner that automatically crops, transforms, and enhances a document in a picture; a color-based object tracker; and a script that detects when someone's eyes are closed. This project served as a quick refresher on basic computer vision techniques, and I'm happy to share the results.

2022

In this project, I implemented a simple deep learning framework from scratch to accompany my blog series of the same name. Each notebook is linked to a specific blog post, starting with the mathematics behind a forward pass and an efficient implementation of custom neural networks. I then implemented the basic backpropagation algorithm for these networks and tested it on an example with dummy data. In the final part, I introduced techniques that further improve training, such as minibatch gradient descent, momentum, and RMSProp.
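
Momentum and RMSProp are both small modifications of the plain gradient step. A minimal NumPy sketch of the two update rules follows, assuming parameters and gradients are arrays of the same shape. The class names and default hyperparameters are illustrative, and this momentum variant uses an exponential moving average of gradients, which is one of several common formulations (the blog series may use another).

```python
import numpy as np


class Momentum:
    """Gradient descent with momentum (EMA-of-gradients formulation)."""

    def __init__(self, lr=0.01, beta=0.9):
        self.lr, self.beta = lr, beta
        self.velocity = None

    def step(self, w, grad):
        if self.velocity is None:
            self.velocity = np.zeros_like(w)
        # Smooth the gradient over time so consistent directions build up speed.
        self.velocity = self.beta * self.velocity + (1 - self.beta) * grad
        return w - self.lr * self.velocity


class RMSProp:
    """Scale each parameter's step by a running RMS of its recent gradients."""

    def __init__(self, lr=0.01, beta=0.9, eps=1e-8):
        self.lr, self.beta, self.eps = lr, beta, eps
        self.sq_avg = None

    def step(self, w, grad):
        if self.sq_avg is None:
            self.sq_avg = np.zeros_like(w)
        # Running average of squared gradients, one value per parameter.
        self.sq_avg = self.beta * self.sq_avg + (1 - self.beta) * grad ** 2
        return w - self.lr * grad / (np.sqrt(self.sq_avg) + self.eps)
```

Each instance keeps state for a single parameter array, so a network would use one optimizer instance per weight matrix. In a minibatch loop, `step` is called once per batch with that batch's gradient.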

2021

This repository contains a PyTorch implementation of the YOLOv3 network with pretrained weights. The script reads a video, applies object detection, and displays the video with the detected objects highlighted. To change the configuration or the input video, edit the code directly in yolo.py.

2020

This project was the main focus of my thesis. It involved developing a proof-of-concept unsupervised anomaly detection system for spacecraft, although the system can also monitor other systems with numerous sensors. At its core is an LSTM network that learns to predict future sensor observations. By comparing the actual observations with the predictions, the system detects anomalies and ranks the sensors by their prediction error. For training and testing, I had the privilege of using one year of sensor measurements from the Mars Express orbiter, provided by the European Space Agency. During testing, the system demonstrated its ability to quickly identify deviations in single or multiple sensors.
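
The ranking step can be sketched as follows, assuming the LSTM's predictions are already available as an array aligned with the actual observations. The function name, the window length, and the optional per-sensor `baseline` (a typical error on normal data, so that sensors with naturally noisy predictions do not dominate the ranking) are hypothetical choices for illustration, not details of the thesis system.

```python
import numpy as np


def rank_sensors_by_error(actual, predicted, sensor_names, window=50, baseline=None):
    """Rank sensors by mean absolute prediction error over the last `window` steps.

    actual, predicted: arrays of shape (timesteps, n_sensors).
    baseline: optional (n_sensors,) typical error on normal data; if given,
    errors are divided by it so sensors are compared on a relative scale.
    """
    err = np.abs(actual[-window:] - predicted[-window:]).mean(axis=0)
    if baseline is not None:
        err = err / np.asarray(baseline, dtype=float)
    order = np.argsort(err)[::-1]  # largest error first
    return [(sensor_names[i], float(err[i])) for i in order]
```

Returning the whole ranking rather than a single flag lets an operator see which sensors deviate most, which is useful when an anomaly affects several sensors at once.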

2020

For this project, I recreated the self-organizing kilobot swarm from the Wyss Institute in the MASON simulation environment. The swarm can arrange itself into any given shape, as long as the shape consists of a single connected piece. The individual bots build up a gradient field around seed bots, which they use to determine their location. Knowing their location, the bots can then decide when and where to join the desired shape. I extended this system with bridge formation, enabling the bots to construct multiple shapes simultaneously. Although the current bridge-forming principle is not entirely stable, the project paper proposes potential solutions to this issue.
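
The gradient field can be illustrated with a simplified Python simulation: seed bots hold the value 0, and every other bot repeatedly sets its value to one more than the smallest value among the bots within communication range, so the values settle at hop counts from the nearest seed. The project itself runs in MASON, a Java toolkit, and real kilobots update asynchronously from noisy distance measurements; the function name and the fixed `comm_radius` here are illustrative assumptions.

```python
import math


def gradient_field(positions, seeds, comm_radius):
    """Hop-count gradient from the nearest seed bot.

    positions: list of (x, y) tuples; seeds: set of indices of seed bots.
    Bots with no chain of neighbors leading to a seed keep the value inf.
    """
    n = len(positions)
    neighbors = [
        [j for j in range(n) if j != i and math.dist(positions[i], positions[j]) <= comm_radius]
        for i in range(n)
    ]
    values = [0 if i in seeds else math.inf for i in range(n)]
    changed = True
    while changed:  # repeat the local rule until no bot changes its value
        changed = False
        for i in range(n):
            if i in seeds:
                continue
            candidate = min((values[j] for j in neighbors[i]), default=math.inf) + 1
            if candidate < values[i]:
                values[i] = candidate
                changed = True
    return values
```

For five bots spaced one unit apart in a line with a seed at one end, the values come out as 0, 1, 2, 3, 4; a bot can combine such values with the known seed positions to estimate where it is.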

2020

This project complemented the main project of my thesis, "Temporal modeling for safer spacecrafts" (2020). It involved developing a proof-of-concept unsupervised anomaly detection system trained on synthetic signals. The system is built on an LSTM network that learns to predict future values of the signal, and it flags an anomaly when the loss between the predicted values and the actual observations exceeds a threshold. Using synthetic data made it possible to test the system's capabilities extensively. The system proved effective at detecting deviations in the signal's amplitude and frequency, but it struggled to reliably detect the decay of a high-frequency component within a signal.
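
Threshold-based detection on the loss can be sketched with NumPy. To keep the example self-contained, the trained LSTM is replaced by a stand-in predictor (the clean, anomaly-free sine wave), and the threshold is set to the mean plus `k` standard deviations of the squared error on normal data. The signal parameters, `k`, and all function names are illustrative assumptions rather than the thesis setup.

```python
import numpy as np


def make_signal(n=1000, freq=0.02, noise=0.05, anomaly=None, seed=0):
    """Noisy unit sine wave; anomaly=(start, end, factor) scales its amplitude there."""
    rng = np.random.default_rng(seed)
    t = np.arange(n)
    gain = np.ones(n)
    if anomaly is not None:
        start, end, factor = anomaly
        gain[start:end] = factor
    return gain * np.sin(2 * np.pi * freq * t) + noise * rng.standard_normal(n)


def fit_threshold(normal_losses, k=4.0):
    """Threshold = mean + k * std of the per-step loss on anomaly-free data."""
    return float(normal_losses.mean() + k * normal_losses.std())


def detect(actual, predicted, threshold):
    """Flag every time step whose squared prediction error exceeds the threshold."""
    return (actual - predicted) ** 2 > threshold
```

Usage: compute `reference = np.sin(2 * np.pi * 0.02 * np.arange(1000))` as the stand-in prediction, fit the threshold on `(make_signal(seed=1) - reference) ** 2`, and run `detect` on a signal generated with `anomaly=(600, 700, 2.0)`. Most steps inside the doubled-amplitude segment are flagged, while only occasional noise spikes are flagged elsewhere. In the real system, `predicted` would come from the LSTM.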

2019

I worked on this project while studying Long Short-Term Memory (LSTM) networks. The goal was to build and train an LSTM network that generates text based on a given text file. At the time, however, I had limited knowledge of language models and didn't use word embeddings. Instead, the model predicts the next character from a sequence of 100 characters. As a result, the generated text often lacks meaningful sentences, but the output is frequently amusing. Despite these limitations, the project gave me hands-on experience with LSTMs and helped me understand their capabilities and challenges.
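
Framing the task as next-character prediction mainly comes down to slicing the text into overlapping windows. A minimal sketch of that preprocessing in plain Python follows, assuming a character-level vocabulary built from the text itself; the function name is illustrative, and the default `seq_len=100` matches the 100-character sequences used in the project.

```python
def make_char_dataset(text, seq_len=100):
    """Turn raw text into (input window, next character) training pairs.

    Returns inputs (lists of seq_len indices), targets (one index each),
    and the char -> index vocabulary.
    """
    vocab = {ch: i for i, ch in enumerate(sorted(set(text)))}
    encoded = [vocab[ch] for ch in text]
    inputs, targets = [], []
    for start in range(len(encoded) - seq_len):
        inputs.append(encoded[start:start + seq_len])  # seq_len characters of context
        targets.append(encoded[start + seq_len])       # the character that follows
    return inputs, targets, vocab
```

Each window would then be one-hot encoded (or passed through an embedding layer) and fed to the LSTM, which outputs a distribution over the vocabulary for the next character. Text is generated by sampling from that distribution, appending the sampled character, and sliding the window forward.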

2019

As part of my thesis (Reinforcement Learning: with applications to robotic control, 2019), I tested different versions of the Q-Learning algorithm in the OpenAI Gym environment. Reinforcement Learning enables an agent to learn a task through rewards for good behavior and penalties for bad behavior. In this project, the goal was to train an agent to play a video game the way a human does: the agent receives game frames as input and must decide which button to press to gain points and avoid losing. In my thesis, I then applied this algorithm to a simulated robotic arm to teach it to pick up boxes.
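
All Q-Learning variants build on the same update, Q(s, a) <- Q(s, a) + α [r + γ max_a' Q(s', a') - Q(s, a)]. A minimal tabular sketch with ε-greedy action selection follows. For game frames, deep Q-learning replaces the table with a convolutional network, which this sketch does not include; the function names and default hyperparameters are illustrative.

```python
import random
from collections import defaultdict


def epsilon_greedy(q, state, n_actions, epsilon, rng=random):
    """Random action with probability epsilon, otherwise the highest-valued action."""
    if rng.random() < epsilon:
        return rng.randrange(n_actions)
    return max(range(n_actions), key=lambda a: q[(state, a)])


def q_update(q, state, action, reward, next_state, done, n_actions, alpha=0.1, gamma=0.99):
    """One Q-learning step towards the bootstrapped target r + gamma * max_a' Q(s', a')."""
    best_next = 0.0 if done else max(q[(next_state, a)] for a in range(n_actions))
    target = reward + gamma * best_next
    q[(state, action)] += alpha * (target - q[(state, action)])


# The table maps (state, action) -> value and starts at 0.0 for unseen pairs.
q_table = defaultdict(float)
```

For example, on a five-state corridor where only reaching the last state is rewarded, repeatedly applying `q_update` to every state-action pair makes `epsilon_greedy(q, s, 2, 0.0)` choose "move right" in every state. Because the update uses the max over next actions rather than the action actually taken, Q-learning can learn the greedy policy while the agent still explores.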

2019

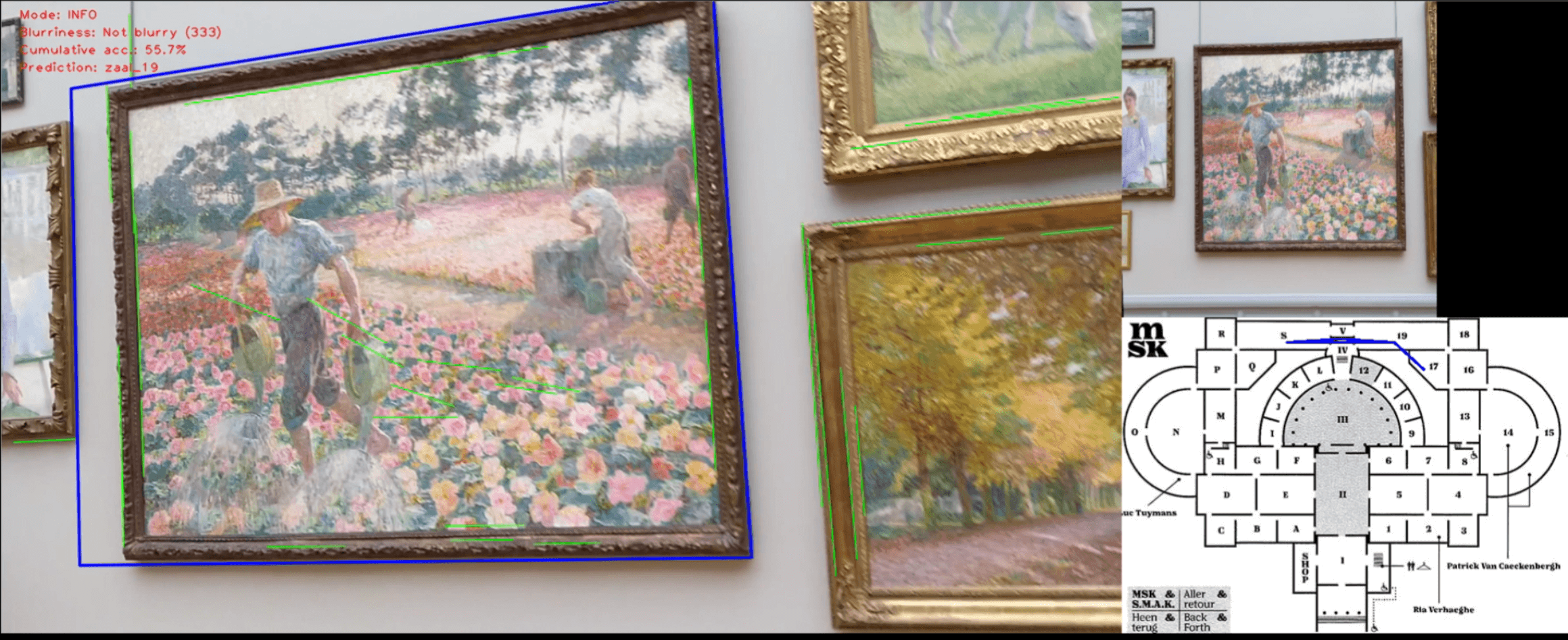

In this group project, we applied computer vision techniques to determine a user's location inside a museum. Using a smartphone camera or a camera mounted in a fixed position, the system captured the user's view as they walked through the museum. The program detected and extracted the paintings in each video frame. By matching their features against paintings with known locations, the system determined the user's position in the museum. To improve accuracy, we discarded location estimates that were physically impossible, such as abrupt jumps across the museum between consecutive frames.
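
The plausibility filter can be sketched as a single pass over per-frame estimates, assuming the museum layout is available as a room adjacency map. The room IDs, the adjacency structure, and the rule of keeping the last accepted room when an estimate is rejected are illustrative assumptions, not necessarily how the group project implemented it.

```python
def filter_locations(estimates, adjacency):
    """Drop per-frame room estimates that imply an impossible jump.

    estimates: room ID per frame from painting matching (None = no match).
    adjacency: dict mapping each room to the set of rooms reachable directly.
    Returns one room per frame: the most recent plausible estimate.
    """
    filtered, current = [], None
    for room in estimates:
        plausible = room is not None and (
            current is None or room == current or room in adjacency.get(current, set())
        )
        if plausible:
            current = room
        filtered.append(current)
    return filtered
```

This greedy rule is simple but can lock onto a wrong room if the first accepted estimate is incorrect; requiring several consecutive frames to agree before accepting a jump would be a more robust variant.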